Background

- A deepfake video featuring a cloned voice of Kamala Harris falsely claimed that President Biden is “senile” and labelled her a “diversity hire.”

- Elon Musk shared the video, amplifying its reach despite its fabricated nature.

- Such content not only violates personal privacy but also undermines the dignity of women in politics.

Is this instance an isolated case?

No.

What are the challenges?

- Gendered Nature of Abuse: While male leaders are occasionally targeted with misinformation about their policies, women leaders are objectified and face body-shaming and sexually explicit attacks.

- Illusion of Empowerment: Although technology is celebrated for empowering women, AI and digital platforms often reflect societal biases.

- AI, largely developed by male-dominated teams, lacks inclusivity and may reinforce existing stereotypes and prejudices rather than challenge them, exacerbating risks of digital abuse against women.

- Safe Harbour Protection: Big tech companies often evade accountability by claiming that their platforms merely reflect user-generated content.

- This means they don’t create the content themselves but simply allow users to share their views and media, with the platforms functioning as passive hosts.

- This reasoning relies on the concept of “safe harbour” protections – legal provisions that shield these platforms from liability for user content as long as they aren’t directly involved in creating or promoting it.

- Limiting Women’s Participation: For many women, digital harassment leads to reduced use of technology, with some families restricting women’s device access, thus hindering their professional and public lives.

Recommendations for Improvement

To combat these challenges, several measures are suggested:

- Enhanced Content Moderation: Social media platforms should prioritise hiring diverse moderation teams to identify and remove harmful content swiftly.

- Accountability Measures: Tech companies must be held accountable for the spread of misinformation and deepfakes on their platforms.

- Effective policies such as hefty fines and temporary restrictions on platforms are necessary.

- E.g., Giorgia Meloni sought €100,000 in damages for a deepfake that misrepresented her.

- Increased Female Representation: Encouraging more women to participate in tech development can help create more inclusive technologies.

- Proactive Reporting Mechanisms: Users should be empowered to report abusive content without facing additional burdens.

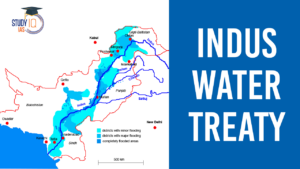
