Context: Google has recently launched Ironwood, its seventh-generation Tensor Processing Unit (TPU).

About Tensor Processing Unit (TPU)

- A Tensor Processing Unit (TPU) is an application-specific integrated circuit (ASIC) developed by Google and designed specifically to accelerate machine learning (ML) workloads such as deep learning.

- TPUs are built to handle operations on tensors, the multi-dimensional arrays that ML models use to hold data and parameters.
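
To make the idea of a tensor concrete, here is a minimal sketch in plain Python. It represents tensors as nested lists and multiplies two matrices, the kind of operation a TPU is built to accelerate. The helper names `shape` and `matmul` are illustrative assumptions, not part of any TPU library, and real ML code would use a framework rather than nested lists.

```python
# Illustrative only: tensors modelled as nested Python lists.
# The helpers below are hypothetical and not taken from any TPU library.

def shape(tensor):
    """Return the dimensions of a regular nested list, e.g. (3, 2, 2)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        if not tensor:
            break
        tensor = tensor[0]
    return tuple(dims)


def matmul(a, b):
    """Multiply two rank-2 tensors (matrices) given as lists of rows."""
    inner = len(b)
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions must match")
    cols = len(b[0])
    return [
        [sum(a_row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for a_row in a
    ]


vector = [1.0, 2.0, 3.0]            # rank 1, shape (3,)
matrix = [[1.0, 2.0], [3.0, 4.0]]   # rank 2, shape (2, 2)
batch = [matrix, matrix, matrix]    # rank 3, shape (3, 2, 2)

identity = [[1.0, 0.0], [0.0, 1.0]]
product = matmul(matrix, identity)  # multiplying by the identity returns the matrix

print(shape(vector), shape(matrix), shape(batch))
print(product)
```

Each output element of `matmul` is a sum of products that does not depend on any other output element, which is why this kind of operation suits highly parallel hardware.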

Key Features of TPUs

- Designed for Machine Learning: Optimised for tensor operations, which are the foundation of neural networks.

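- High Performance: For many ML workloads, TPUs can deliver significantly faster computation than CPUs and, in some cases, GPUs.

- In favourable cases, training that takes weeks on other hardware can be completed in hours on TPUs, though actual gains depend on the model and workload.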

- Parallelism: Like GPUs, TPUs rely on parallel processing, but they are more specialised and can carry out very large numbers of tensor operations simultaneously.

- Energy Efficiency: For AI workloads, TPUs are generally more energy-efficient than GPUs and CPUs, delivering more computation per watt.
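
The parallelism point can be made concrete by counting the work in a single matrix multiplication. The sketch below is plain arithmetic, not a model of any real TPU. The layer sizes are hypothetical values chosen for illustration, and `matmul_mac_count` and `independent_outputs` are assumed helper names.

```python
# Illustrative arithmetic only: how much independent work one matrix
# multiplication contains. The sizes below are hypothetical.

def matmul_mac_count(m, k, n):
    """Multiply-accumulate operations in an (m x k) @ (k x n) product."""
    return m * k * n


def independent_outputs(m, n):
    """Output elements of the product; each can be computed independently."""
    return m * n


batch_size, in_features, out_features = 128, 256, 512

macs = matmul_mac_count(batch_size, in_features, out_features)
outputs = independent_outputs(batch_size, out_features)

print(f"{macs:,} multiply-accumulates across {outputs:,} independent outputs")
# prints "16,777,216 multiply-accumulates across 65,536 independent outputs"
```

Even this modest layer involves millions of multiply-accumulate operations with no dependencies between output elements, which is the kind of workload that parallel accelerators are designed to exploit.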

Key Differences Between CPU, GPU and TPU

| Feature | Central Processing Unit (CPU) | Graphics Processing Unit (GPU) | Tensor Processing Unit (TPU) |
| --- | --- | --- | --- |
| Purpose | General computing | Graphics and parallel computing | AI and ML-specific tasks |
| Processing Type | Sequential | Parallel | Tensor-based, parallel |
| Efficiency in AI | Low | High | Very high |

Advanced Air Defence Radars: Types, Comp...

Advanced Air Defence Radars: Types, Comp...

Ion Chromatography, Working and Applicat...

Ion Chromatography, Working and Applicat...

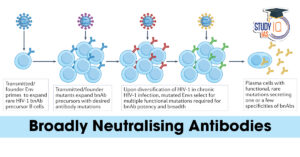

Broadly Neutralising Antibodies (bNAbs):...

Broadly Neutralising Antibodies (bNAbs):...